
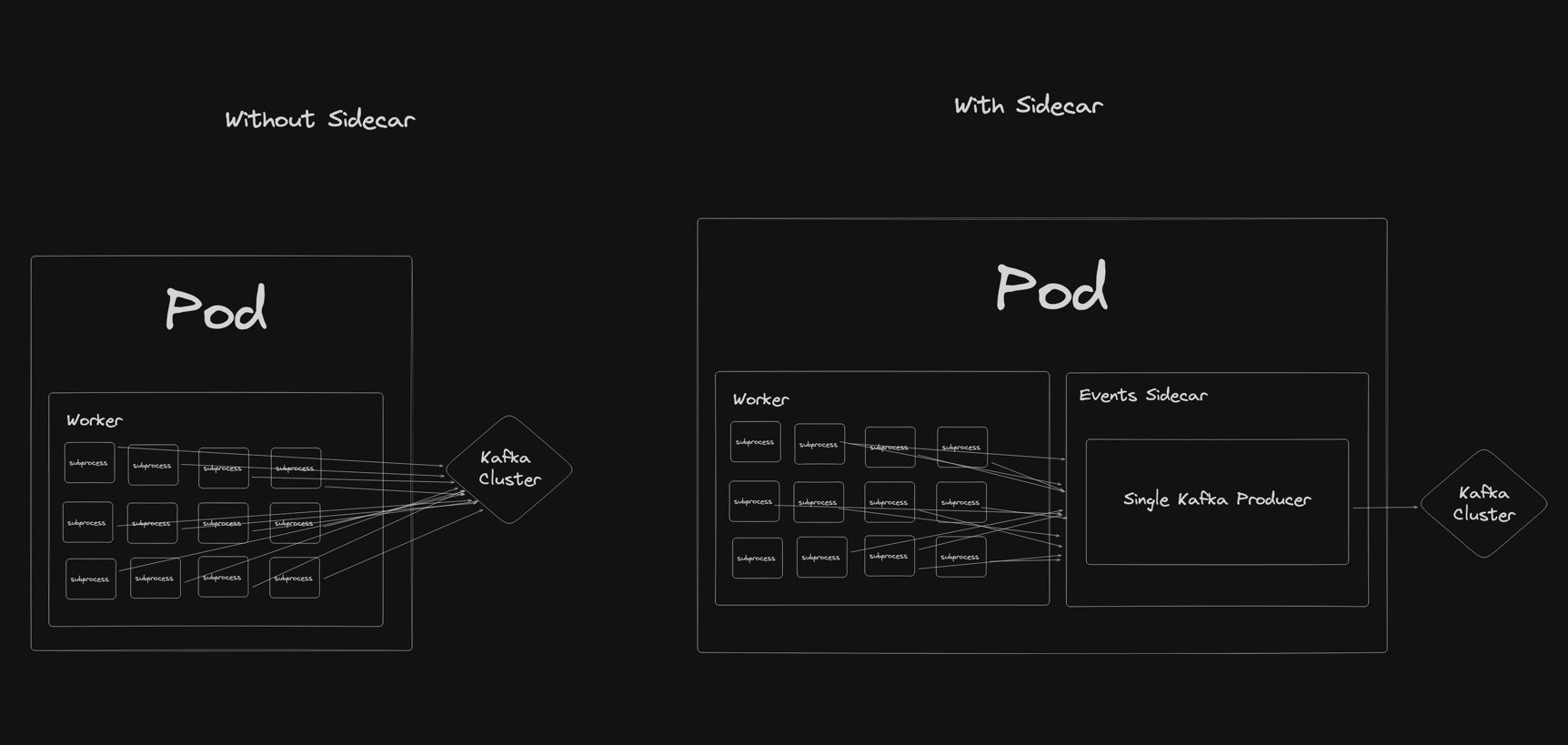

Kafka clients open a TCP connection to every broker that hosts partitions they need to write to. If an app uses multiprocessing, the connection count multiplies quickly because every subprocess maintains separate connections, as our Kafka library is not fork-safe.

At large scale, with many partitions across many brokers, plus dozens of worker processes per pod and hundreds of pods, this can easily balloon into hundreds of thousands of live TCP connections during peak hours. As a toy example, 1000 pods * 10 subprocesses * 10 brokers is 100,000 total connections.
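The toy arithmetic above can be written as a small helper. This is only a sketch: the function name `total_connections` is made up for illustration, and it assumes the worst case where every process talks to every broker.

```python
def total_connections(pods: int, procs_per_pod: int, brokers: int) -> int:
    """Worst-case live producer connections when every process
    holds one TCP connection to every broker (an assumption)."""
    return pods * procs_per_pod * brokers


# The toy example from the text: 1000 pods, 10 subprocesses, 10 brokers.
print(total_connections(1000, 10, 10))  # 100000
```

Each factor multiplies the others, which is why adding processes per pod is as costly as adding pods.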

In addition to socket buffers, brokers maintain per-connection metadata. Together, that increases heap usage and, under pressure, triggers aggressive garbage collection (GC). GC spikes CPU, and CPU spikes hurt availability, leading to timeouts and potential data loss.

What did not work (and why)

“Just add more brokers?”

Adding brokers increases total cluster resources and often reduces per-broker load. However, producers maintain persistent TCP connections to the brokers that host the leader partitions they write to.

As partitions are rebalanced and spread across more brokers, each producer maintains connections to more brokers. As a result, total connection cardinality across the cluster will likely increase, so adding brokers doesn’t fix the multiplicative effect from many processes per pod.

“Just use bigger brokers?”

Kafka brokers typically run with a relatively small JVM heap and rely on the operating system page cache for throughput. Industry guidance commonly keeps the broker heap around 4-8 GB.

Adding more machine RAM primarily increases page cache, not heap, unless you explicitly raise -Xmx (which usually isn’t useful beyond roughly 6-8 GB because of GC tradeoffs).

“Just add a proxy?”

Database pools like PgBouncer work because SQL sessions can be pooled and reused at transaction boundaries, allowing many clients to share a smaller set of database connections.

Kafka’s protocol, in contrast, is stateful across the entire client session, assuming a single long-lived client per broker connection for correctness and throughput.

“Just tune the client?”

Tuning linger, idle connection timeouts, batching, and network buffers helped a bit, but didn’t address the core multiplicative effect from multiprocessing.

The solution: A producer sidecar per pod

We introduced a gRPC sidecar that runs a single Kafka producer for a pod. Application processes (including forked workers) send emit RPCs to the sidecar instead of opening broker connections.

Why it helped

- Fewer connections: Consolidates “many producers per pod” into a single producer per pod. In multiprocessing workloads, this can be an order-of-magnitude reduction.

- Preserves ordering: Within a pod, all writes to a partition flow through the same producer, preserving Kafka’s partition-level ordering guarantees.

- Scales horizontally: Each pod has its own sidecar. Scaling the deployment scales sidecars linearly without reintroducing per-process fan-out.

- Safe fallback: If the sidecar is unavailable, the client can fall back to direct emission to Kafka to avoid data loss. This is rare in practice and limited to the affected pod.

- Lightweight: CPU and memory overhead of the sidecar are tiny for our producer workloads.

- Minimal refactoring: We needed the solution to be as “drop-in” as possible. We didn’t want to risk introducing bugs by refactoring existing services, many of which are mission-critical, to support some new pattern. Adopting the sidecar only required changes to configuration, not code.
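To make the funnel idea concrete, here is an in-process analogy, not the actual sidecar: threads stand in for worker processes, a `queue.Queue` stands in for the gRPC hop, and a dict of lists stands in for Kafka partitions. The class name `SidecarSketch` and its methods are hypothetical.

```python
import queue
import threading


class SidecarSketch:
    """One consumer thread drains a shared queue on behalf of many workers.
    Stand-in for 'a single producer per pod'; no Kafka or gRPC involved."""

    def __init__(self) -> None:
        self._inbox: queue.Queue = queue.Queue()
        self.log: dict = {}  # partition -> events in the order they were handled
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def emit(self, partition: int, event) -> None:
        # Workers only enqueue; they never touch the "broker" themselves.
        self._inbox.put((partition, event))

    def _run(self) -> None:
        while True:
            item = self._inbox.get()
            if item is None:  # sentinel pushed by close()
                return
            partition, event = item
            self.log.setdefault(partition, []).append(event)

    def close(self) -> None:
        self._inbox.put(None)
        self._thread.join()
```

Because a single consumer handles the queue in FIFO order, each worker’s events land in a partition in the order that worker emitted them, which mirrors the ordering argument in the list above.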

Outcomes

After migrating our largest deployments to the sidecar:

- Peak producer connections: Down about 10x cluster-wide.

- Broker heap usage at peak: Down about 70 percentage points.

- CPU spikes: The recurring spikes we saw during peak traffic windows disappeared post-rollout.

Implementation notes to adapt to your stack

- Client toggle: Add a “sidecar mode” flag to your Kafka client wrapper. In sidecar mode, the wrapper uses gRPC to emit events to the local sidecar.

- Backpressure and retries: The sidecar producer buffers events and enforces per-partition ordering.

- Metrics: Record metrics from both client wrapper and sidecar: request counts, queue depth, batch size, latency percentiles, and error rates.

- SLOs: Define SLOs on sidecar availability and tail latency. Alert on sustained fallback to direct Kafka emits.

- Compression and batching: Consolidation can increase average batch size, which improves compression and reduces network bandwidth.

- Rollout: Start with the highest connection offenders (multiprocessing producers). Roll out pod by pod with a feature flag. Monitor per-broker connections, GC, and client success rates.
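The client toggle and fallback behavior from the notes above might look roughly like this. It is a sketch under assumptions: `EventEmitter`, `sidecar_emit`, and `direct_emit` are hypothetical names, the two callables stand in for a gRPC stub call and a direct Kafka produce call, and treating `ConnectionError` as “sidecar unavailable” is an illustrative choice.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical signature: (topic, payload) -> None, raising on failure.
EmitFn = Callable[[str, bytes], None]


@dataclass
class EventEmitter:
    sidecar_emit: EmitFn        # would wrap the gRPC call to the local sidecar
    direct_emit: EmitFn         # would wrap a direct Kafka produce call
    sidecar_mode: bool = False  # the configuration flag
    fallback_count: int = 0     # export as a metric; alert if it keeps growing

    def emit(self, topic: str, payload: bytes) -> str:
        """Send one event and report which path was used."""
        if not self.sidecar_mode:
            self.direct_emit(topic, payload)
            return "direct"
        try:
            self.sidecar_emit(topic, payload)
            return "sidecar"
        except ConnectionError:
            # Sidecar unavailable: fall back to direct emission to avoid data loss.
            self.fallback_count += 1
            self.direct_emit(topic, payload)
            return "fallback"
```

Keeping the flag inside the wrapper is what lets services switch modes through configuration alone.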

Minimal interface (pseudocode)

service ProducerSidecar {
    rpc Emit(ProduceRequest) returns (ProduceAck);
}

ProduceRequest: topic, key (optional), headers, event_bytes

ProduceAck/Outcome: received=true/false, error_code, retry_after_ms
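For readers who want something closer to real types, the two message shapes could be mirrored as Python dataclasses. The field names follow the pseudocode; the field types, the defaults, and the `should_retry` helper are assumptions added for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass(frozen=True)
class ProduceRequest:
    topic: str
    event_bytes: bytes
    key: Optional[bytes] = None  # optional, as in the pseudocode
    headers: Dict[str, bytes] = field(default_factory=dict)


@dataclass(frozen=True)
class ProduceAck:
    received: bool
    error_code: int = 0      # assumed: 0 means "no error"
    retry_after_ms: int = 0  # assumed: 0 means "no retry hint"


def should_retry(ack: ProduceAck) -> bool:
    """Hypothetical helper: retry only when the sidecar rejected the
    request and supplied a retry hint."""
    return (not ack.received) and ack.retry_after_ms > 0
```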

What we built along the way (and kept)

- Client and sidecar metrics: Visibility into end-to-end emit latency, success rates, and queueing. These metrics let us set up alerts and understand how our system performs over time.

- Emission quality monitoring: Record an attempt when emitting an event and a success when the broker acks. Use a lightweight store to aggregate by app and topic. We enabled this for direct Kafka emits to get a baseline “success rate” for asynchronous event emission. We could then compare the old approach to our sidecar’s “success rate” as we rolled out to ensure no degradation.

- Native delivery reports: While the sidecar pattern is mostly drop-in, one lost feature is delivery reports. The reports tell us if the broker successfully received the emission. We use this to trigger fallbacks to a secondary event store, log errors, or increment our emission quality monitoring success counter.

  But since the callbacks are now tied to the sidecar producer, we don’t have access to the callback on the worker side. We implemented a separate streaming endpoint on our gRPC server to solve this. This endpoint sends delivery reports from the sidecar back to the primary worker container, allowing clients to leverage custom delivery callbacks written in whatever language the worker container uses. The sidecar maintains a ring buffer, continuously sending delivery reports over the gRPC stream. At the same time, the worker runs a separate thread to handle processing these reports and running the callbacks.
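The ring buffer plus callback thread described above can be sketched in a single process. This leaves out the gRPC stream entirely: `DeliveryReportBuffer` and `run_callbacks` are hypothetical names, and dropping the oldest report when the buffer is full is an assumed policy, not something the original specifies.

```python
import threading
from collections import deque


class DeliveryReportBuffer:
    """Bounded buffer of delivery reports (assumed drop-oldest policy)."""

    def __init__(self, capacity: int) -> None:
        self._reports = deque(maxlen=capacity)  # oldest entry is evicted when full
        self._cond = threading.Condition()
        self._closed = False

    def push(self, report) -> None:
        with self._cond:
            self._reports.append(report)
            self._cond.notify()

    def close(self) -> None:
        with self._cond:
            self._closed = True
            self._cond.notify_all()

    def pop(self):
        """Block until a report is available; return None once closed and drained."""
        with self._cond:
            while not self._reports and not self._closed:
                self._cond.wait()
            return self._reports.popleft() if self._reports else None


def run_callbacks(buffer: DeliveryReportBuffer, callback) -> None:
    """Worker-side loop: hand each report to the user's delivery callback."""
    while True:
        report = buffer.pop()
        if report is None:
            return
        callback(report)
```

On the worker side, `run_callbacks` would run in its own thread, for example `threading.Thread(target=run_callbacks, args=(buffer, on_delivery), daemon=True).start()`, where `on_delivery` is whatever callback the service already uses.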

Takeaways

- Count connections, not just throughput. Multiprocessing can silently multiply broker connections, increasing the broker’s memory usage.

- Operationally, boring is better than clever. A per-pod sidecar keeps blast radius small and failure semantics clear. Additionally, adopting the sidecar only requires configuration changes, avoiding additional complexity from refactoring.

- Metrics first. Measuring attempt-to-ack success and tail latency before and after adopting the sidecar pattern gives you confidence as you roll out.
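A minimal sketch of the attempt-to-ack measurement, assuming an in-memory counter keyed by app and topic. The class `EmissionQuality` and its method names are hypothetical; a real deployment would back this with whatever lightweight store and metrics pipeline it already has.

```python
from collections import defaultdict
from typing import Optional


class EmissionQuality:
    """Counts emit attempts and broker acks per (app, topic)."""

    def __init__(self) -> None:
        self._attempts = defaultdict(int)
        self._successes = defaultdict(int)

    def record_attempt(self, app: str, topic: str) -> None:
        # Call when the event is handed to the producer (or sidecar).
        self._attempts[(app, topic)] += 1

    def record_success(self, app: str, topic: str) -> None:
        # Call from the delivery callback once the broker acks.
        self._successes[(app, topic)] += 1

    def success_rate(self, app: str, topic: str) -> Optional[float]:
        attempts = self._attempts.get((app, topic), 0)
        if attempts == 0:
            return None  # nothing emitted yet, so no rate to report
        return self._successes.get((app, topic), 0) / attempts
```

Computing the same rate for direct emits first gives the baseline against which the sidecar rollout can be compared.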

Credits: Robinhood’s talk on their consumer-side Kafkaproxy sidecar was a major inspiration to us. Additionally, a huge thanks to all members of Team Events for their contributions to this project: Mahsa Khoshab, Sugat Mahanti, and Sarah Story.
