🚀 Able to supercharge your AI workflow? Attempt ElevenLabs for AI voice and speech era!

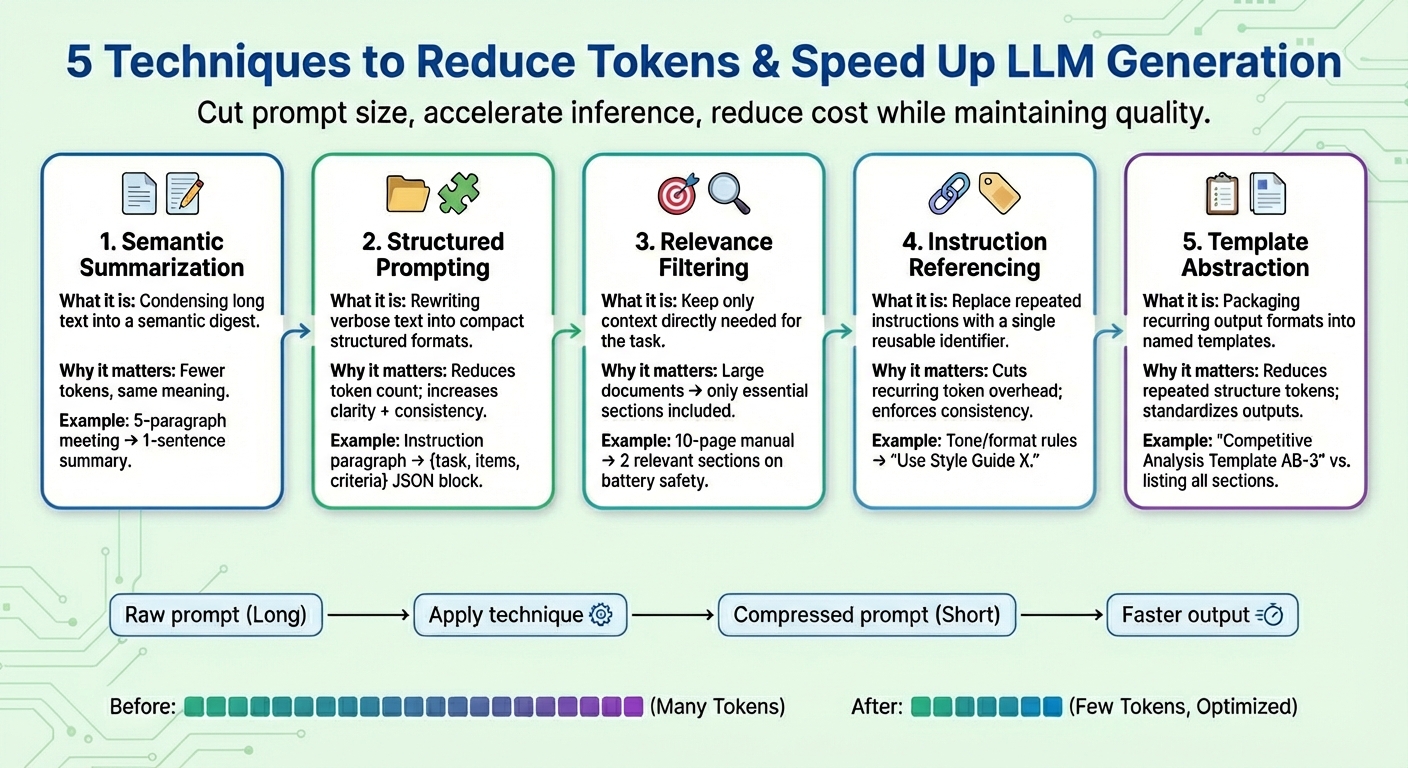

On this article, you’ll be taught 5 sensible immediate compression methods that scale back tokens and velocity up giant language mannequin (LLM) era with out sacrificing process high quality.

Subjects we’ll cowl embody:

- What semantic summarization is and when to make use of it

- How structured prompting, relevance filtering, and instruction referencing minimize token counts

- The place template abstraction suits and how you can apply it persistently

Let’s discover these methods.

Immediate Compression for LLM Era Optimization and Value Discount

Picture by Editor

Introduction

Giant language fashions (LLMs) are primarily skilled to generate textual content responses to person queries or prompts, with complicated reasoning beneath the hood that not solely includes language era by predicting every subsequent token within the output sequence, but additionally entails a deep understanding of the linguistic patterns surrounding the person enter textual content.

Immediate compression methods are a analysis matter that has these days gained consideration throughout the LLM panorama, because of the have to alleviate gradual, time-consuming inference attributable to bigger person prompts and context home windows. These methods are designed to assist lower token utilization, speed up token era, and scale back general computation prices whereas retaining the standard of the duty consequence as a lot as potential.

This text presents and describes 5 generally used immediate compression methods to hurry up LLM era in difficult eventualities.

1. Semantic Summarization

Semantic summarization is a way that condenses lengthy or repetitive content material right into a extra succinct model whereas retaining its important semantics. Reasonably than feeding the complete dialog or textual content paperwork to the mannequin iteratively, a digest containing solely the necessities is handed. The end result: the variety of enter tokens the mannequin has to “learn” turns into decrease, thereby accelerating the next-token era course of and decreasing price with out shedding key data.

Suppose a protracted immediate context consisting of assembly minutes, like “In yesterday’s assembly, Iván reviewed the quarterly numbers…”, summing as much as 5 paragraphs. After semantic summarization, the shortened context might appear to be “Abstract: Iván reviewed quarterly numbers, highlighted a gross sales dip in This autumn, and proposed cost-saving measures.”

2. Structured (JSON) Prompting

This method focuses on expressing lengthy, easily flowing items of textual content data in compact, semi-structured codecs like JSON (i.e., key–worth pairs) or an inventory of bullet factors. The goal codecs used for structured prompting usually entail a discount within the variety of tokens. This helps the mannequin interpret person directions extra reliably and, consequently, enhances mannequin consistency and reduces ambiguity whereas additionally decreasing prompts alongside the way in which.

Structured prompting algorithms might rework uncooked prompts with directions like Please present an in depth comparability between Product X and Product Y, specializing in worth, product options, and buyer scores right into a structured kind like: {process: “examine”, objects: [“Product X”, “Product Y”], standards: [“price”, “features”, “ratings”]}

3. Relevance Filtering

Relevance filtering applies the precept of “specializing in what actually issues”: it measures relevance in components of the textual content and incorporates within the last immediate solely the items of context which might be actually related for the duty at hand. Reasonably than dumping total items of knowledge like paperwork which might be a part of the context, solely small subsets of the data which might be most associated to the goal request are saved. That is one other option to drastically scale back immediate dimension and assist the mannequin behave higher when it comes to focus and boosted prediction accuracy (bear in mind, LLM token era is, in essence, a next-word prediction process repeated many instances).

Take, for instance, a complete 10-page product guide for a cellphone being added as an attachment (immediate context). After making use of relevance filtering, solely a few brief related sections about “battery life” and “charging course of” are retained as a result of the person was prompted about security implications when charging the system.

4. Instruction Referencing

Many prompts repeat the identical sorts of instructions time and again, e.g., “undertake this tone,” “reply on this format,” or “use concise sentences,” to call a couple of. Instruction referencing creates a reference for every widespread instruction (consisting of a set of tokens), registers each solely as soon as, and reuses it as a single token identifier. Every time future prompts point out a registered “widespread request,” that identifier is used. In addition to shortening prompts, this technique additionally helps preserve constant process conduct over time.

A mixed set of directions like “Write in a pleasant tone. Keep away from jargon. Preserve sentences succinct. Present examples.” may very well be simplified as “Use Type Information X.” after which be reused when the equal directions are specified once more.

5. Template Abstraction

Some patterns or directions usually seem throughout prompts — as an illustration, report constructions, analysis codecs, or step-by-step procedures. Template abstraction applies an analogous precept to instruction referencing, but it surely focuses on what form and format the generated outputs ought to have, encapsulating these widespread patterns beneath a template title. Then template referencing is used, and the LLM does the job of filling the remainder of the data. Not solely does this contribute to retaining prompts clearer, it additionally dramatically reduces the presence of repeated tokens.

After template abstraction, a immediate could also be was one thing like “Produce a Aggressive Evaluation utilizing Template AB-3.” the place AB-3 is an inventory of requested content material sections for the evaluation, each being clearly outlined. One thing like:

Produce a aggressive evaluation with 4 sections:

- Market Overview (2–3 paragraphs summarizing trade tendencies)

- Competitor Breakdown (desk evaluating a minimum of 5 rivals)

- Strengths and Weaknesses (bullet factors)

- Strategic Suggestions (3 actionable steps).

Wrapping Up

This text presents and describes 5 generally used methods to hurry up LLM era in difficult eventualities by compressing person prompts, usually specializing in the context a part of it, which is most of the time the foundation reason behind “overloaded prompts” inflicting LLMs to decelerate.

🔥 Need one of the best instruments for AI advertising? Try GetResponse AI-powered automation to spice up your online business!